Understanding PDF/UA Validation Results: A Comparative Study Across Tools

Article · December 26, 2025

By Diana Kosovac, PDFix

PDF/UA validation plays an essential role in producing accessible, standards-conformant documents. We wanted to understand better how commonly used PDF/UA-1 validators report results when run on the same set of files, and to share those observations openly with the community.

Our goal was not to evaluate or rank tools, but to compare validation outcomes and highlight where results align or differ, so practitioners can make informed choices about their own workflows.

What we compared

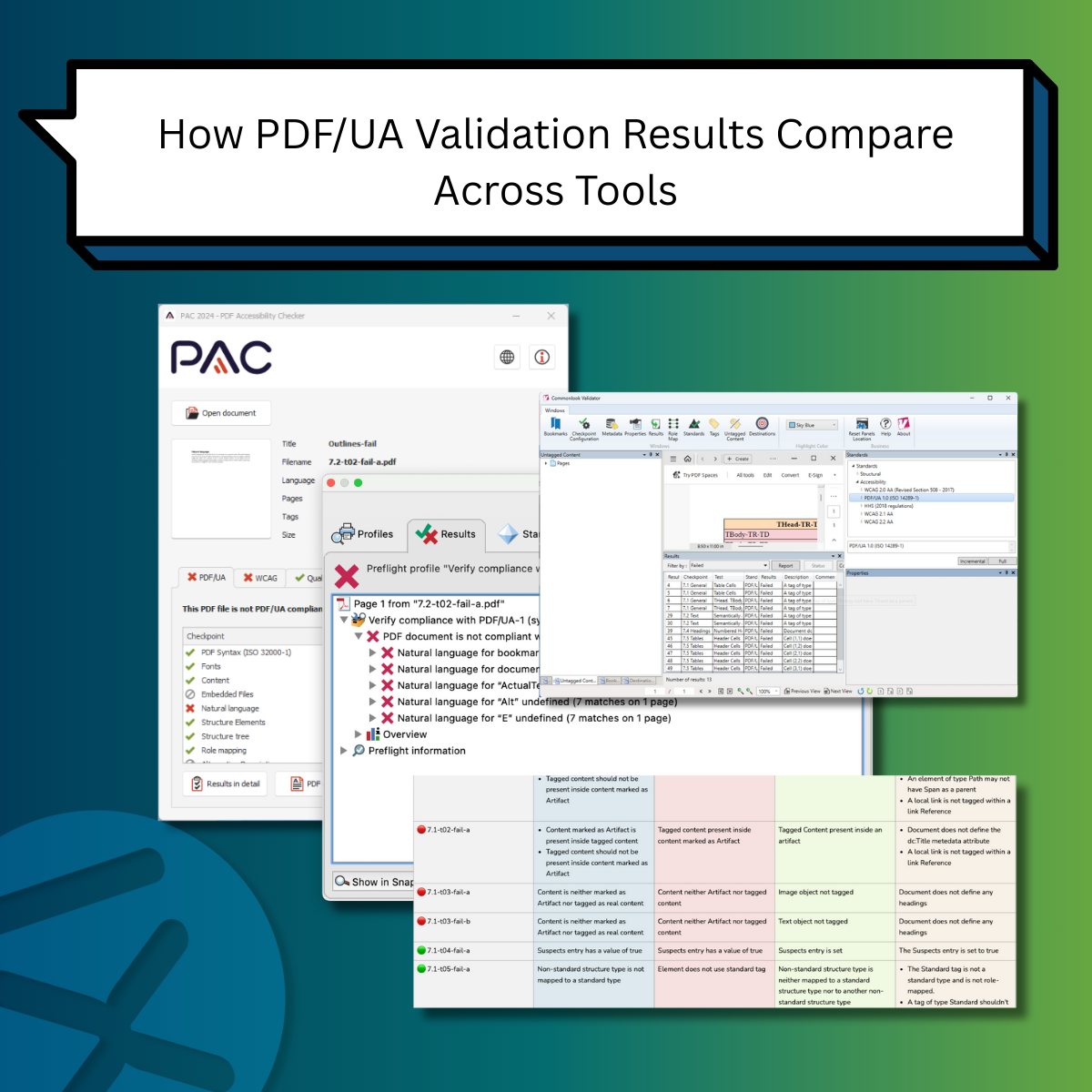

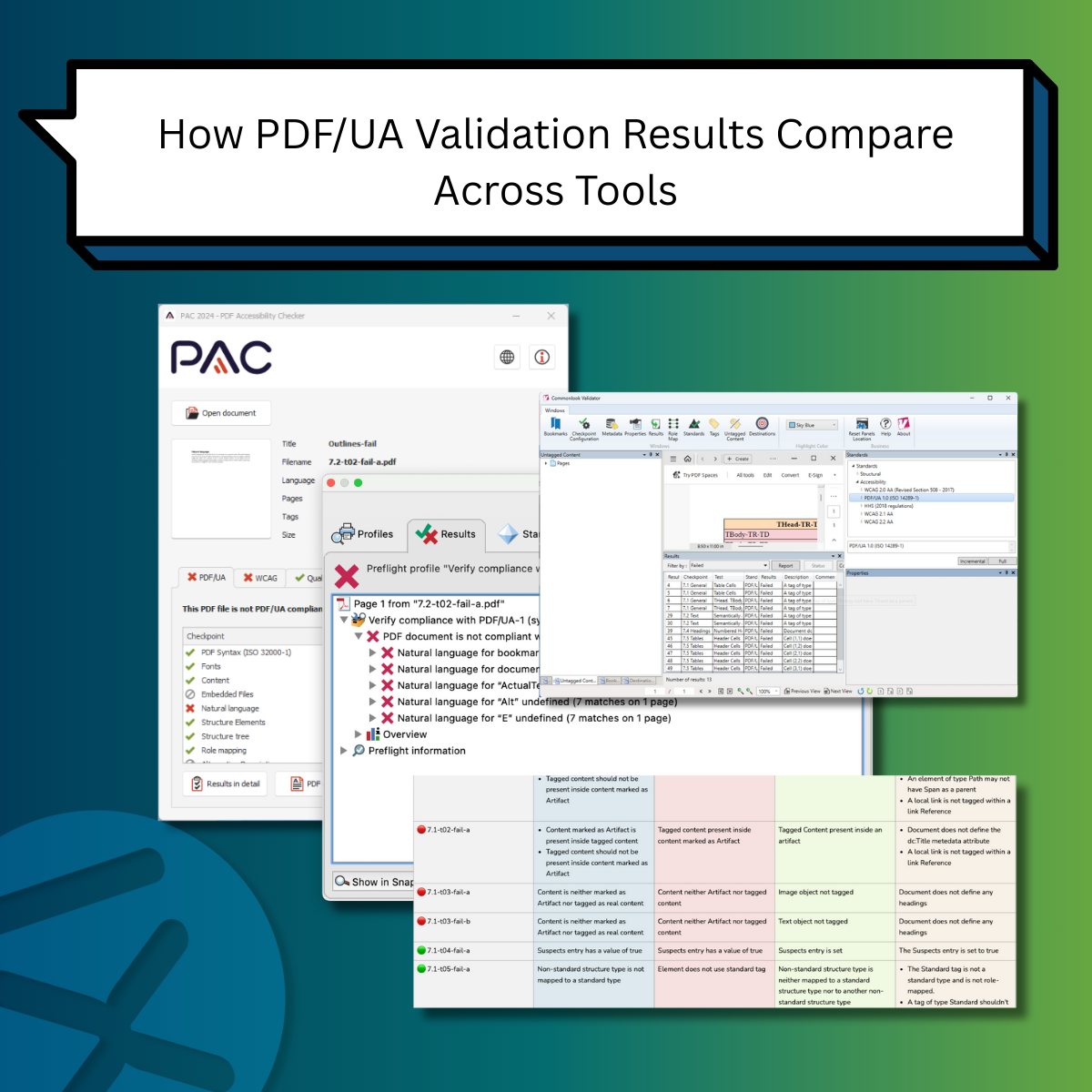

We tested 155 reference PDF files using four widely adopted free PDF/UA-1 validators:

- veraPDF

- Adobe Preflight (PDF/UA)

- PAC (PDF Accessibility Checker)

- CommonLook PDF Validator

All validators were run in error-only mode, focusing exclusively on failed checks defined by PDF/UA-1 (ISO 14289-1). Warnings and advisory messages were intentionally excluded to keep the comparison consistent.

What the results show

Across the tested files, we observed three common result patterns:

- Matching results — validators reported the same issue

- Partial matches — validators identified a similar problem but described it differently

- Different results — validators reported different outcomes for the same file

In practice, these patterns show that validators interpret and report the standard's requirements in different ways, particularly in areas such as metadata, tag structure, tables, and language attributes.

Why this information is useful

For PDF Association members, tool vendors, accessibility specialists, and document producers, this comparison offers practical value:

- It illustrates how validation outputs can vary, even when tools follow the same standard

- It helps teams understand why cross-checking a file with more than one validator can reveal additional context

- It supports informed decision-making when selecting validation tools for specific use cases

Importantly, these differences are a natural part of any standards-based ecosystem. They reflect implementation choices, scope, and reporting approaches rather than tool quality.

Explore the full comparison

We’ve published the complete methodology, comparison tables, and links to the reference test files for anyone who would like to explore the data in more detail.

Read the full PDF/UA validator comparison.

By sharing this comparison, we hope to contribute transparent, neutral insight that supports collaboration and informed choice across the PDF accessibility community.