New large-scale PDF corpus now publicly available

A developer and researcher working on PDF technologies for more than 20 years, Peter is the PDF Association’s CTO and an independent technology consultant.

The SafeDocs corpus, “CC-MAIN-2021-31-PDF-UNTRUNCATED”, is now publicly available via the Digital Corpora project website: https://digitalcorpora.org/corpora/file-corpora/cc-main-2021-31-pdf-untruncated/. This new corpus - nearly 8 million PDFs totaling about 8 TB - was gathered from across the web in July/August of 2021. The PDF files were selected by Common Crawl in its July/August 2021 crawl (CC-MAIN-2021-31) and subsequently updated and packaged by the SafeDocs team at NASA’s Jet Propulsion Laboratory as part of the DARPA SafeDocs program. Users should note Common Crawl's license and terms of use and the Digital Corpora project's Terms of Use.

The DARPA-funded SafeDocs fundamental research program began its study of file formats and protocols in 2019, with the PDF Association advising on research into parser security and enhancing trust in the ubiquitous PDF document format. Among other efforts, this work included collation and analysis of large-scale corpora of real-world PDF files to assess extant data (and thus, the state of the industry), and to assist in evaluating SafeDocs-developed technology.

Also developed under SafeDocs, the previously-announced Stressful PDF Corpus focused on errors, malformations and other stressors in parsing PDF by mining issue tracker (bug) databases of open-source PDF projects. Reactions to date indicate that this targeted corpus has been extremely valuable to the PDF development community in locating latent defects and improving PDF parser robustness. However, because of its origin in issue trackers, the “Stressful PDF Corpus” does not accurately represent PDF files in general.

Benefits

This new corpus of PDF files offers five key benefits over the publicly accessible Common Crawl data sets stored in Amazon Public Datasets:

- As far as we know, CC-MAIN-2021-31-PDF-UNTRUNCATED is the single largest corpus of real-world (extant) PDFs that is publicly available. Many other smaller, targeted or synthetic PDF-centric corpora exist, including the well-known GovDocs1 (a widely used corpus that helped establish the Digital Corpora project back in 2009).

- Common Crawl truncates all the files it collects at 1MB. For this corpus, the SafeDocs JPL team refetched complete PDF files from the original URL recorded by Common Crawl without any file size limitation, thus improving the representation of PDF files sourced from the web.

- This corpus focuses on a single widely used file format: PDF.

- For ease of use and to enrich analysis, provenance metadata is provided which includes geo-ip-location (where possible) and some high-level metadata extracted from the PDF files by the Poppler pdfinfo utility.

- All PDF files (both the original Common Crawl PDFs that were less than 1MB and the larger, and thus re-fetched, files) are conveniently packaged in ZIP archives, in the same manner as GovDocs1.

For the specific CC-MAIN-2021-31 crawl, the Common Crawl project writes:

The data was crawled July 23 – August 6 and contains 3.15 billion web pages or 360 TiB of uncompressed content. It includes page captures of 1 billion new URLs, not visited in any of our prior crawls.

Sebastian Nagel (engineer & data scientist for Common Crawl) recently analyzed the latest Common Crawl data (Jan/Feb 2023, CC-MAIN-2023-06). PDF documents made up just 0.8% of the successfully fetched records yet occupied 11.85% of the 88 TiB of WARC storage, with a very similar 25.6% of all PDF documents being truncated! A tebibyte (abbreviation: TiB) is 2^40 bytes, or 1,099,511,627,776 bytes.
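As a quick check of the unit arithmetic, the following sketch converts whole tebibytes to bytes with shell integer arithmetic. The helper name tib_to_bytes is purely illustrative, and a shell with 64-bit arithmetic is assumed.

```shell
# Convert a whole number of tebibytes (TiB) to bytes: 1 TiB = 2^40 bytes.
# tib_to_bytes is an illustrative helper name; 64-bit shell arithmetic is assumed.
tib_to_bytes() {
  echo $(( $1 * (1 << 40) ))
}

tib_to_bytes 1    # prints 1099511627776
tib_to_bytes 88   # the WARC storage figure quoted for CC-MAIN-2023-06
```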

As with any Common Crawl results, it is not possible to determine precisely how representative this corpus is of all PDF files across the entire web, or of PDF files in general. Obviously, a very significant number of PDF files are unavailable to web crawlers such as Common Crawl, as they lie within private intranets or repositories, behind logins or data entry forms, or are not made publicly accessible due to confidential content. Accordingly, corpora created from web crawling will not represent the actual distribution of every PDF feature or capability.

Common Crawl data can therefore be viewed as a convenience sample of the web, but with a serious limitation due to significant file truncation. The new “CC-MAIN-2021-31-PDF-UNTRUNCATED” corpus offers a more reliable large-scale sample of PDF files from the publicly accessible web and is widely applicable.

Application

PDF is a ubiquitous format in broad use across many industrial, consumer and research domains.

Many existing corpora (such as GovDocs1) focus on extant data but are now quite dated and thus no longer reflect current changes and trends in PDF. The format continues to evolve, with vendors such as Adobe releasing numerous extensions to PDF 1.7 up to ExtensionLevel 11 in 2017, and with PDF 2.0 initially released in 2017 and updated in 2020. In addition, the PDF creator and producer ecosystem has evolved substantially in recent years, with PDF creation on mobile and cloud platforms, advancements in graphic arts, illustration and office authoring packages, and wider adoption of Tagged PDF for content reuse and accessibility.

With advances in machine learning technology across multiple domains, reliable larger data sets are also in high demand. If you follow PDFacademicBot on Twitter you will already know the large variety of academic domains that utilize PDF corpora!

The “CC-MAIN-2021-31-PDF-UNTRUNCATED” corpus is thus useful for:

- PDF technology and software development, testing, assessment, and evaluation

- Information privacy research

- PDF metadata analysis

- Document understanding, text extraction, table identification, OCR/ICR, formula identification, document recognition and analysis, and related document engineering research domains.

- Malware and cyber-security research. The “CC-MAIN-2021-31-PDF-UNTRUNCATED” corpus has not been sanitized so cyber-hygiene practices should always be used!

- ML/AI applications and research (document classification, document content, text extraction, etc.)

- Preservation and archival research

- Usability and accessibility research

- Software engineering research (parsing, formal methods, etc.)

We encourage researchers from all domains to contact us by emailing pdf-research-support@pdfa.org when they use this new data set to ensure that results and conclusions are soundly reasoned.

The CC-MAIN-2021-31-PDF-UNTRUNCATED corpus

The new corpus is hosted in Amazon S3 by the Digital Corpora project at https://downloads.digitalcorpora.org/corpora/files/CC-MAIN-2021-31-PDF-UNTRUNCATED/.

Dr Simson Garfinkel, founder and volunteer in the Digital Corpora project, said "One of the advantages of hosting this dataset in the Digital Corpora is that it's all based in Amazon S3. This makes it easy for students and researchers to create an analysis system in the Amazon cloud and get high-speed access to this 8TB dataset without having to pay for data hosting or data transfer. This contribution of roughly 8TB of data doubles the size of the Digital Corpora, and it gives us an up-to-date sampling of PDF documents from all over the Internet. These kinds of documents usefully power a wide range of both academic research and student projects."

All PDF files are named using a sequential 7-digit number with a .pdf extension (e.g. 0000000.pdf, 0000001.pdf through 7932877.pdf) - the file number is arbitrary and is based on the SHA-256 of the PDF. Duplicate PDFs were removed from the corpus based on the SHA-256 hash. From the 8.3 million URLs for which there was a PDF file, there are 7.9 million unique PDF files.

PDF files are packaged into ZIP files based on their sequentially numbered filename, with each ZIP file containing up to 1,000 PDF files (fewer if duplicates were detected and removed). The resulting ZIP files range in size from just under 1.0 GB to 2.8 GB. With a few exceptions, all of the 7,933 ZIP files in the zipfiles subdirectory tree contain 1,000 PDF files.

Each ZIP is named using a sequential 4-digit number representing the high 4 digits of the 7-digit PDF filenames in the ZIP - so 0000.zip (1.2 GB) contains all PDFs numbered from 0000000.pdf to 0000999.pdf; 0001.zip (1.6 GB) contains PDFs numbered from 0001000.pdf to 0001999.pdf; etc. ZIP files are further clustered into groups of 1,000 and stored in subdirectories below zipfiles based on the 4-digit ZIP filename, where each subdirectory is limited to 1,000 ZIP files: zipfiles/0000-0999/, zipfiles/1000-1999/, etc.
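The naming scheme can be sketched as a small shell function that maps a PDF filename to the relative path of the ZIP that should contain it. The function name zip_path_for_pdf is illustrative and not part of any corpus tooling, and the sketch assumes that every subdirectory follows the N000-N999 pattern of the two examples given.

```shell
# Map a corpus PDF name to the relative path of the ZIP archive that should
# contain it. zip_path_for_pdf is an illustrative helper, and the N000-N999
# subdirectory pattern is assumed to hold for the whole zipfiles tree.
zip_path_for_pdf() {
  pdf_number="${1%.pdf}"
  case "$pdf_number" in
    [0-9][0-9][0-9][0-9][0-9][0-9][0-9]) ;;
    *) echo "expected a 7-digit PDF name, got: $1" >&2; return 1 ;;
  esac
  zip_number=$(printf '%s' "$pdf_number" | cut -c1-4)   # high 4 digits name the ZIP
  group_digit=$(printf '%s' "$pdf_number" | cut -c1)    # first digit selects the subdirectory
  printf 'zipfiles/%s000-%s999/%s.zip\n' "$group_digit" "$group_digit" "$zip_number"
}

zip_path_for_pdf 0000374.pdf   # prints zipfiles/0000-0999/0000.zip
zip_path_for_pdf 7932877.pdf   # prints zipfiles/7000-7999/7932.zip (under the assumed pattern)
```

Knowing the relative path makes it possible to fetch a single ZIP rather than the whole corpus.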

Uncompressed, the entire corpus occupies nearly 8 TB!

With such significant bandwidth and storage requirements, it is not expected that most users will download the entire corpus. Users may more conveniently access the corpus from its S3 buckets on AWS, or pick and choose PDFs as necessary based on the provenance and metadata indices provided.

Provenance and PDF metadata

The corpus also includes both provenance information and PDF metadata as CSV tables that link each PDF file back to the original Common Crawl record in the CC-MAIN-2021-31 dataset, and offer a richer view of the PDF file via extracted metadata. The CSV tables are in the metadata subdirectory. (The dates in the CSV filenames refer to when the metadata was collated.)

The full corpus metadata tables are provided as three large gzipped, UTF-8 encoded, CSV tables (e.g. cc-provenance-20230303.csv.gz, 1.2 GB compressed). Small uncompressed samples of each table are also provided for convenience since the main files are too large for spreadsheet applications such as Excel. These tables include the data relevant to only 0000.zip so that users may easily familiarize themselves with a much smaller portion of the data (e.g. cc-provenance-20230324-1k.csv, only 414 KB). Note that there are 1,045 data rows in these small *-1k.csv tables because these tables are URL-based - the same PDF may have come from multiple URLs. For example, 0000374.pdf was retrieved from five URLs, so it appears five times in these tables, but the PDF is only stored once in 0000.zip.
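Because the tables are URL-based, a quick way to see which PDFs were reached from several URLs is to count rows per file_name. The sketch below assumes the table has already been converted to TSV with a header row containing a file_name column; the helper name and the sample rows are made up for illustration and are not real corpus data.

```shell
# List PDFs that appear on more than one row of a URL-based metadata table.
# Reads TSV (with a header row) on stdin and prints "file_name<TAB>row_count".
# pdfs_with_multiple_urls is an illustrative helper name.
pdfs_with_multiple_urls() {
  awk -F '\t' '
    NR == 1 {                         # locate the file_name column via the header
      for (i = 1; i <= NF; i++) if ($i == "file_name") col = i
      next
    }
    col { rows[$col]++ }              # count rows (URLs) per PDF
    END { for (f in rows) if (rows[f] > 1) print f "\t" rows[f] }
  ' | sort
}

# Made-up sample rows, for illustration only
printf 'url_id\tfile_name\n11\t0000001.pdf\n12\t0000002.pdf\n13\t0000002.pdf\n' |
  pdfs_with_multiple_urls
```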

Using the CSV tables in the metadata subdirectory it is possible to:

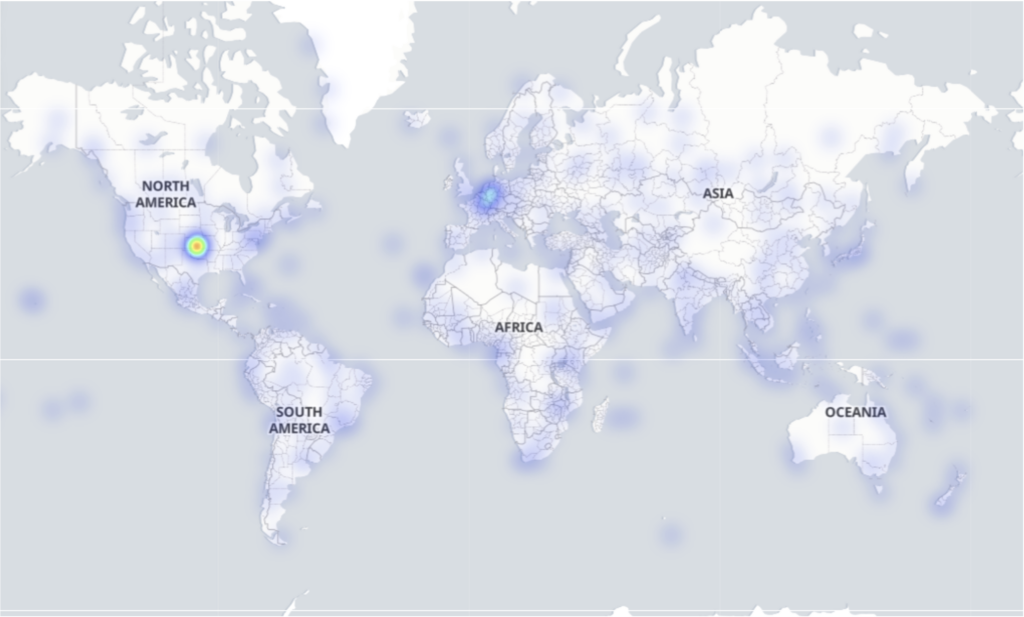

- Identify PDFs from a particular URL, IP address, top-level domain (TLD), or country - see the cc-hosts*.csv metadata table with columns host, ip_address, tld, and country, respectively. Note that the country value indicates where the PDF file was hosted (based on IP address, geolocated by MaxMind’s geolite2), and not the content of the PDF;

- Identify PDFs by a geo-location (based on IP address via geolite2) - see the cc-hosts*.csv provenance table with columns latitude and longitude;

- Identify PDFs by file size - see the cc-provenance*.csv table, fetched_length column;

- Identify PDFs by HTTP header MIME and/or detected MIME from the Common Crawl fetch - see the cc-provenance*.csv table and cc_http_mime and cc_detected_mime columns;

- Identify the PDF producer and creator applications from the pdfinfo-*.csv metadata table and the producer and creator columns;

- Identify PDF properties (such as the number of pages and the PDF header version) or high-level features such as Tagged PDF, Linearization, or the presence of JavaScript from the pdfinfo-*.csv table.
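As one example of this kind of selection, the sketch below keeps only rows whose fetched_length is at least a given value, using plain awk. It assumes the provenance table has been converted to TSV with a header row, and that fetched_length is a byte count (the unit is an assumption here). The helper name and the sample rows are made up for illustration.

```shell
# Print the header plus every row whose fetched_length is >= the given minimum.
# Reads TSV (with a header row) on stdin. rows_with_min_length is an
# illustrative helper; fetched_length is assumed to be a size in bytes.
rows_with_min_length() {
  awk -F '\t' -v min="$1" '
    NR == 1 {                         # locate the fetched_length column via the header
      for (i = 1; i <= NF; i++) if ($i == "fetched_length") col = i
      print
      next
    }
    col && $col != "" && $col + 0 >= min + 0   # skip blank values, compare numerically
  '
}

# Made-up sample rows, for illustration only
printf 'url_id\tfile_name\tfetched_length\n1\t0000001.pdf\t52311\n2\t0000002.pdf\t2097152\n3\t0000003.pdf\t\n' |
  rows_with_min_length 1048576
```

Looking the column up by name rather than by position keeps the sketch independent of the exact column order in the table.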

Due to Unicode-encoded metadata, all *-1k.csv tables have a UTF-8 Byte Order Marker (BOM) prepended so that they may easily be opened by spreadsheet applications (such as Microsoft Excel) by double-clicking, and not result in mojibake. This is because today’s Excel does not prompt for an encoding when opening CSV files directly - the prompts for delimiters and encoding only occur if manually importing the data into these spreadsheet applications.

The very large gzipped metadata CSV files for the entire corpus do not have UTF-8 BOMs added as these are not directly usable by office applications.
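When the small sample tables are processed with command-line tools rather than a spreadsheet, any tool that does not strip the BOM will treat its three bytes (EF BB BF) as part of the first header name. A minimal sketch for detecting and removing it with standard od, tr, tail and cat follows; the helper names has_utf8_bom and strip_utf8_bom are illustrative.

```shell
# Succeed if the file starts with the 3-byte UTF-8 BOM (EF BB BF).
has_utf8_bom() {
  [ "$(od -An -tx1 -N3 "$1" | tr -d ' \n')" = "efbbbf" ]
}

# Write the file to stdout without a leading BOM (unchanged if there is none).
strip_utf8_bom() {
  if has_utf8_bom "$1"; then
    tail -c +4 "$1"    # start output at the 4th byte
  else
    cat "$1"
  fi
}

# Demonstration on a throwaway file with a made-up header line
demo_file=$(mktemp)
printf '\357\273\277url_id,file_name\n' > "$demo_file"
strip_utf8_bom "$demo_file"
rm -f "$demo_file"
```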

Detailed information on all tables is provided in the documentation at https://digitalcorpora.org/cc-main-2021-31-pdf-untruncated/.

Working with large CSV files

Both the provenance and PDF metadata tables for the full CC-MAIN-2021-31-PDF-UNTRUNCATED corpus of nearly 8 million files are extremely large and beyond the capabilities of typical spreadsheet applications. Users may find it easier to either import the CSV tables into a relational database (using the url_id column as a key) or use Linux command-line utilities such as the eBay TSV Utilities or GNU datamash (also used with the Arlington PDF Model):

```shell
# Convert each CSV table to TSV format using eBay TSV Utilities
$ csv2tsv cc-hosts-20230324-1k.csv > cc-hosts-20230324-1k.tsv
$ csv2tsv cc-provenance-20230324-1k.csv > cc-provenance-20230324-1k.tsv
$ csv2tsv pdfinfo-20230324-1k.csv > pdfinfo-20230324-1k.tsv

# Find what PDF versions are present (pdf_version is field #7 in pdfinfo table)
$ cut -f 7 pdfinfo-20230324-1k.tsv | sort -u
1.1
1.2
1.3
1.4
1.5
1.6
1.7
pdf_version

# Do a simple frequency count for each PDF version using GNU datamash (blanks = errors)
$ datamash --headers --sort groupby 7 count 7 < pdfinfo-20230324-1k.tsv
GroupBy(pdf_version)  count(pdf_version)
                      4
1.1                   1
1.2                   10
1.3                   85
1.4                   290
1.5                   262
1.6                   113
1.7                   280

# Find what non-zero page rotations are present (page_rotation is field #20 in pdfinfo table)
$ cut -f 20 pdfinfo-20230324-1k.tsv | sort -u
0
270
90
page_rotation

# Extract all the PDF metadata about PDFs with non-zero page rotation on page 0.
# Because some PDFs have a blank entry (due to error, etc) a string search is done
$ tsv-filter --header --str-ne page_rotation:0 pdfinfo-20230324-1k.tsv
url_id   file_name    parse_time_millis  exit_value  timeout  stderr  pdf_ve..
3821405  0000008.pdf  226                0           f        1.3  ApeosPort-IV C2270
5471541  0000031.pdf  333                0           f        1.4  PageMaker 6.5 Acroba…
5144211  0000042.pdf  61                 0           f        1.5  PScript5.dll Version 5…
64841    0000058.pdf  64                 0           f        1.6  Adobe Acrobat 10.1.8
8161461  0000091.pdf  78                 0           f        1.6  PScript5.dll Version 5..
6520824  0000095.pdf  66                 0           f        1.4  2021-0…
6084861  0000258.pdf  25                 0           f        1.7  Adobe Acrobat 18.9.0
3154244  0000300.pdf  15                 1           f        Command Line Error: Incorrect password…
6590720  0000387.pdf  38                 0           f        1.4  Adobe Illustrator(R) 1…
5472057  0000398.pdf  17                 0           f        1.6  Canon iR-ADV C2230 PD…
4797702  0000399.pdf  82                 1           f        Syntax Error: Couldn't find trailer di…
…

# Pretty-print TSV
$ tsv-pretty cc-hosts-20230324-1k.tsv
url_id   file_name    host                    tld  ip_address      country  latitude …
6368476  0000000.pdf  augustaarchives.com     com  66.228.60.224   US       33.7484…
5290472  0000001.pdf  demaniocivico.it        it   185.81.4.146    IT       41.8903…
983569   0000002.pdf  www.polydepannage.com   com  188.165.112.24  FR       8.8581…
88000    0000003.pdf  community.jisc.ac.uk    uk   52.209.218.96   IE       53.3382…
1883928  0000004.pdf  www.molinorahue.cl      cl   172.96.161.211  US       34.0583…
1524741  0000005.pdf  www.delock.de           de   217.160.0.15    DE       1.2993…
6034545  0000006.pdf  detken.net              net  144.76.174.86   DE       47.6702…
2902384  0000007.pdf  www.acutenet.co.jp      jp   210.248.135.16  JP       35.6897…
3821405  0000008.pdf  yokosukaclimatecase.jp  jp   157.112.152.50  JP       35.6897…
…

# Count number of rows where PDF was hosted in Japan (JP)
$ tsv-filter --header --str-eq country:JP cc-hosts-20230324-1k.tsv | wc -l
36

# Show rows where PDF was hosted in Japan (JP)
$ tsv-filter --header --str-eq country:JP cc-hosts-20230324-1k.tsv
url_id   file_name    host                           tld  ip_address      country  latitude  longitude
2902384  0000007.pdf  www.acutenet.co.jp             jp   210.248.135.16  JP       35.6897…
3821405  0000008.pdf  yokosukaclimatecase.jp         jp   157.112.152.50  JP       35.6897…
7021015  0000025.pdf  kanagawa-pho.jp                jp   60.43.148.215   JP       35.6897…
2881847  0000081.pdf  a-s-k.co.jp                    jp   163.43.87.179   JP       35.6897…
450918   0000117.pdf  matsumurahp.server-shared.com  com  211.13.196.134  JP       …
2440192  0000121.pdf  shiga-sdgs-biz.jp              jp   183.90.237.4    JP       35.6897…
…

# Export rows where the PDF was hosted in Japan (JP) to a new TSV file
$ tsv-filter --header --str-eq country:JP cc-hosts-20230324-1k.tsv > jp.tsv

# Locate the provenance information for all PDFs hosted in Japan (JP)
$ tsv-join --header -f jp.tsv --key-fields url_id cc-provenance-20230324-1k.tsv
url_id   file_name    url                                             cc_digest                 cc_http_mime  cc_detected_mime…
2902384  0000007.pdf  http://www.acutenet.co.jp/image/fukkou/070.pdf  MDWS3PDB5BXLPPATHTCD7Q7…
…
```

Note that the console output shown above has been reduced in width and length - a wide monitor is recommended! For more complex data queries, using a relational database and SQL is also highly recommended.
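Where the TSV utilities are not installed, a join on url_id can be approximated with plain awk. This is only a sketch: it assumes both files are TSV with a header row and that url_id is the first column of each (as it is in the console output shown above), and the helper name join_on_url_id and the sample rows are made up for illustration.

```shell
# Print the header and all rows of the second TSV file whose url_id
# (assumed to be column 1) also appears in the first TSV file.
# join_on_url_id is an illustrative helper; the first file must not be empty.
join_on_url_id() {
  awk -F '\t' '
    NR == FNR { if (FNR > 1) wanted[$1] = 1; next }   # first file: collect keys, skip header
    FNR == 1 || ($1 in wanted)                        # second file: header plus matching rows
  ' "$1" "$2"
}

# Demonstration with made-up rows in throwaway files
demo_dir=$(mktemp -d)
printf 'url_id\tcountry\n2\tJP\n' > "$demo_dir/keys.tsv"
printf 'url_id\turl\n1\thttp://example.com/a.pdf\n2\thttp://example.com/b.pdf\n' > "$demo_dir/data.tsv"
join_on_url_id "$demo_dir/keys.tsv" "$demo_dir/data.tsv"
rm -r "$demo_dir"
```

The key set is held in memory, so the smaller, already filtered file is best passed first.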

Conclusion

The use of files from raw Common Crawl data sets cannot always drive useful extrapolations for engineering or research purposes due to Common Crawl’s file truncation at 1MB, which is especially problematic and unrealistic for PDF files. The new CC-MAIN-2021-31-PDF-UNTRUNCATED corpus from SafeDocs, publicly hosted by the Digital Corpora project, overcomes this significant limitation, allowing developers, engineers and researchers across all domains to better understand the results they obtain from testing web-sourced PDFs at scale while also ensuring their experiments are entirely reproducible.

Acknowledgements

The PDF Association thanks the NASA/JPL team for developing this data set, and Simson Garfinkel and the Digital Corpora project for its publication. We are also grateful to the Amazon Open Data Sponsorship Program for enabling free public access to this large corpus, as part of their ongoing Digital Corpora project sponsorship.

This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA) under Contract No. HR001119C0079. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Defense Advanced Research Projects Agency (DARPA). Approved for public release.